A new smartphone function is being developed to detect the user’s emotions and help the user overcome stressful situations. The innovation is the result of the work of researchers at the Institute of Mathematical and Computer Sciences (ICMC-USP) in São Carlos, together with the Federal University of São Carlos (UFSCar) and the University of Arizona. To detect the signals that indicate changes in emotions in certain circumstances, the researchers used the sensors in devices such as computer and smartphone cameras (e.g. to capture facial expressions) and intelligent wristbands and watches that allow monitoring of heart function, sleep quality, the number of heartbeats, steps taken, and calories consumed and expended daily by the user.

“The innovative aspect of our research is that we use various sensors to measure the user’s wellness and stress levels,” says project coordinator Professor Jó Ueyama, from USP. “Since most of these sensors are wireless, we can synchronize them with other devices and transfer the data obtained to other platforms, such as smartphones.”

Based on the information collected, the researchers began to establish parameters in order to develop a sequence of commands (algorithms) that would be run on a computer or smartphone. When the system is ready, these devices will be able to identify the user’s emotions, interpret them in real time, and react intelligently, suggesting actions to change an undesirable emotional state, for example, or reinforce a desired one. “Our intent is to detect the emotion so that, in the case of a stressful situation at work, for example, the user can take a break to change his emotional state,” he says. The device can then suggest that the user listen to a song, play a game, or watch a video on YouTube. The suggestions will take into account the profile and interests of the user. Among possible system applications is use by professionals who work in high-risk environments, such as nuclear reactors, oil platforms and space stations. “By monitoring the stress levels of these individuals, we would be ensuring that fewer errors will be committed and reducing the possibility of accidents,” adds Ueyama.

The development of the computational tool, based on algorithms, is a very complex task. “We need to teach the computer the meaning of each of the signals received by the sensors,” he says. “If a change in heart rate is more indicative of a change in the emotional state than a change in facial expression, we have to weigh these parameters differently in order to place more emphasis on a given personal characteristic.” The time it takes to carry out a task at work, the number of pauses and the number of times the same action is repeated can also indicate an altered emotional state, according to the researchers.
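The weighting idea Ueyama describes can be illustrated with a minimal Python sketch. It is not the researchers' algorithm: the names `SignalReading` and `stress_score`, the 0-to-1 deviation scale, and the example weights are all assumptions made here for illustration. The sketch only shows how a signal judged more indicative of emotional change (heart rate, in this example) can count more heavily than another (facial expression) in a combined score.

```python
from dataclasses import dataclass


@dataclass
class SignalReading:
    """One sensor signal (hypothetical structure, not from the project)."""
    name: str
    deviation: float  # assumed: change from the user's baseline, scaled to 0..1
    weight: float     # assumed: how indicative this signal is of emotional change


def stress_score(readings):
    """Return the weighted average of the signal deviations.

    Signals with a larger weight pull the combined score more strongly.
    """
    total_weight = sum(r.weight for r in readings)
    if total_weight <= 0:
        raise ValueError("at least one reading must have a positive weight")
    return sum(r.deviation * r.weight for r in readings) / total_weight


# Illustrative values only: heart rate is weighted three times as heavily
# as facial expression.
readings = [
    SignalReading("heart_rate", deviation=0.8, weight=3.0),
    SignalReading("facial_expression", deviation=0.2, weight=1.0),
]
print(round(stress_score(readings), 2))
```

With equal weights the two signals would simply be averaged; raising the heart-rate weight moves the combined score toward the heart-rate reading. In a real system the weights would have to be learned from data such as the experiments described in the article, rather than set by hand.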

Assessed behavior

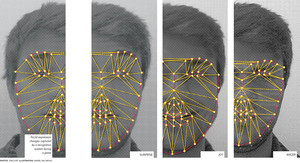

In order to establish patterns for emotional responses, some experiments were carried out with student participants and psychologist assessments. In one of the experiments, the behaviors of USP and UFSCar students were evaluated while they played the classic game Super Mario Brothers. Each student played for about 10 minutes. During this period, heart rate was measured, facial expressions were filmed, and all actions taken using the remote control were monitored.

Four psychologists watched the footage in order to assess the times at which there was a significant variation in the data collected by the sensors. In order to classify the emotions independently, they did not have prior knowledge of the information collected by the sensors.

The information was then cross-referenced in order to determine if the facial expressions corresponded to the data collected. The experiment with the game was conducted at UFSCar, under the coordination of Professor Vânia Neris in the Computer Science Department, who works on the part of the project that involves human-computer interaction. At the University of Arizona, the Brazilian professor Thienne Johnson is responsible for the project and, since October, has been supervising one of Ueyama’s students on a doctoral exchange program. “His task is using smartphones to monitor elderly individuals who take medication periodically.”

“The project began a year and a half ago when master’s student Vinícius Pereira Gonçalves showed interest in working with emotion sensing,” says Ueyama, who is carrying out research in the area of wireless sensor networks. One of his projects, consisting of wireless sensors to monitor flooding, financed by FAPESP, is being used in São Carlos. “We are monitoring flood locations throughout the city through an agreement with city hall,” says Ueyama, who also works on smartphone programming. The project is scheduled for completion in 2016. Until then, more experiments will be carried out at partner institutions such as a hospital in Marília, São Paulo State, and at the University of Arizona. Among the various possible applications of the project in hospitals is the development of applications to aid in the recovery of patients or drug addicts.

Project

Exploiting the sensor web and participatory sensing approaches for urban river monitoring (nº 2012/22550-0); Grant Mechanism – Regular research project; Principal investigator Jó Ueyama (USP); Investment R$102,046.64 (FAPESP).