Physicist and biologist Uri Alon is a researcher at the Weizmann Institute of Science in Israel. In 2009 he published an article in Molecular Cell, in which he suggests a set of factors that researchers and students should consider when selecting a research problem. In a few pages, and with a tinge of self-help, he makes recommendations for those who are just beginning their careers, such as a three-month thought process before committing to a problem, or attempting to identify among emerging issues in their field of research the one that sparks the most personal interest. The work was not mentioned extensively in other articles. There were just 14 citations according to the Web of Science database by Thomson Reuters. However, and unexpectedly, it had a considerable impact. It is one of the most popular works on Mendeley, an academic social network on which users can store and share articles in their profiles and learn which papers are generating interest among other researchers.

On Mendeley, 130,000 people have already downloaded Alon’s essay. Something similar happened with US President Barack Obama. In July 2016 he published an article on the reform of the US health system in the Journal of the American Medical Association (JAMA). It received only seven citations in academic works, but it was mentioned in more than 8,000 Twitter posts and on 197 Facebook pages. “These cases indicate that the way that science is published and disseminated is changing with the expansion of social media,” says biologist Atila Iamarino, one of the developers of the ScienceBlogs Brasil network.

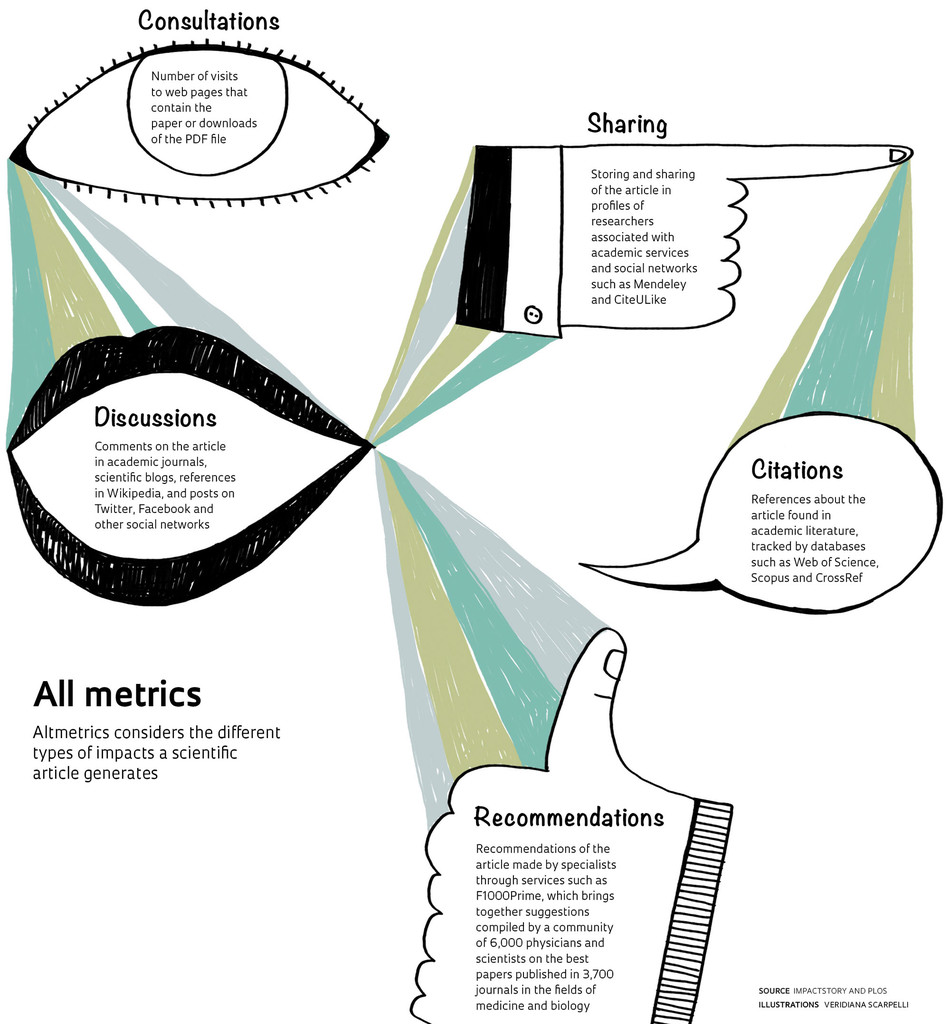

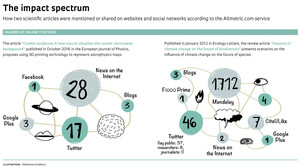

The number of citations an article receives in other papers and a publication’s impact factor are recognized parameters used to assess the importance of scientific production. In recent years, however, new indicators have sprung up that record how science resonates with varied audiences. This trend gave a real boost to altmetrics (alternative metrics), a branch of scientometrics that measures the influence of scientific production by analyzing mentions on websites and social networks, the number of downloads, and shared scientific PowerPoint presentations, to name a few examples.

The term was proposed for the first time in a September 2010 tweet by Jason Priem, who at the time was earning his PhD in information science at the University of North Carolina at Chapel Hill. He was one of the developers of ImpactStory, an open-source tool that provides altmetrics data. These days a number of services assemble information of this type. Even before the term was coined, in March 2009, the Public Library of Science (PLOS), which publishes open access periodicals, established the PLOS Article Level Metrics (PLOS ALM), a system that uses a variety of indicators, such as usage statistics, academic citations, mentions in blogs or Wikipedia entries, and sharing on social media, to monitor how papers published in its journals resonate with different audiences.

Other publishers followed suit. In 2013, Elsevier of the Netherlands took over Mendeley, which has five million users today. Recently, it acquired the Social Science Research Network (SSRN), an open access repository in which more than 300,000 researchers in the social sciences and humanities have already shared preprints of articles and works that have yet to be published (see Pesquisa FAPESP Issue nº 245). Both acquisitions sought to expand the publisher’s digital business and provide new indicators to its clients.

Growing interest in alternative metrics led the National Information Standards Organization (NISO) in the United States to write a guide with advice for producing and disseminating alternative metrics. The document, released in February 2016, stresses the importance of producing precise indicators and stipulates that the source of the information as well as the methodology for interpreting it should be transparent. The Wellcome Trust, a foundation that funds biomedical research in the United Kingdom, adopted altmetrics parameters to strengthen the evaluation of the research it funds. A document the foundation published in 2015 shows that these indicators make it possible to monitor the repercussions of a scientific article immediately and to measure the type of impact the research has on the field of health, information that is seldom known. According to the document, early detection of interest on the part of citizens and decision-makers in given research topics helps the Wellcome Trust find linkages between scientific and political agendas. “Many people, such as health professionals, science communicators, students, journalists and public officials, read and use scientific articles even though no citations follow,” says Iara Vidal Pereira de Souza, who is working on her PhD at the Graduate Information Science Program of the Brazilian Institute of Science and Technology Information (Ibict) and the School of Communication at the Federal University of Rio de Janeiro.

The new ways of evaluating the influence of research are in no way a replacement for the traditional methods of measuring its importance. Sérgio Salles-Filho, a professor at the Department of Science and Technology Policy of the Institute of Geosciences at Unicamp (DPCT-Unicamp) and assistant coordinator of FAPESP Special Programs, notes that initiatives such as the Wellcome Trust’s are still infrequent. “In 2009, the United Kingdom adopted a new research evaluation system, the Research Excellence Framework (REF), which is making extensive use of bibliometric indicators such as the number of citations, and it is heavily based on peer evaluation,” he says.

Veridiana Scarpelli

A myriad of sources

Spaniard Rodrigo Costas, a researcher at the Center for Science and Technology Studies (CWTS) at the University of Leiden in the Netherlands, drew attention to the wide variety of sources of indicators for altmetrics. “Mendeley basically manages online bibliographical references that researchers use. The type of interaction we observed in this platform is very different from how content is shared on Twitter and Facebook, which are more appropriate for disseminating research of interest to the general public,” Costas says. In the opinion of Fábio Castro Gouveia, a researcher at the Museum of Life of the Oswaldo Cruz Foundation (Fiocruz), there are slight differences even between Facebook and Twitter that should be taken into consideration. “On Facebook, the research projects that resonate most are those that have popular appeal. Twitter is the network that researchers use most,” he says.

From the standpoint of alternative metrics, Gouveia analyzed the works published by Fiocruz researchers in the journal PLOS One. A total of 416 articles published from 2007 to 2015 were reviewed using the system developed by Altmetric, a company founded in 2011 by British bioinformatics specialist Euan Adie. The firm offers tools to monitor Internet references to individuals, companies and scientific articles. A control sample of 500 articles published in the same journal was also selected at random for comparison. On Facebook pages, 15.4% of the Fiocruz works were mentioned, compared to 14.8% of the control sample. On Twitter, 56.5% of the Fiocruz articles were mentioned at least once, compared to 46.6% of the control sample articles. Just 2.4% of the Fiocruz articles were cited in blogs, compared to 5.4% for the control sample. “The altmetric performance by Fiocruz appears to follow the overall trend. In addition, the number of tweets received was impressive,” Gouveia says. In another study, published in February 2016 in Scientometrics, German researchers used Altmetric data to identify the countries with the most articles shared on Twitter. The top three are Denmark, Finland and Norway. Brazil ranks 14th (of a total of 22), ahead of countries such as China, South Korea, India and Japan. According to the authors, Twitter is one of the social networks researchers use most, which is why they propose developing an index specifically to measure the impact of production on this platform.

Recently completed research analyzed the 100 articles with the highest altmetric scores taken from the SciELO database, using the Altmetric tool. Instead of analyzing the data from the articles, João de Melo Maricato, a professor at the University of Brasília (UnB), examined the profiles of individuals who shared works on Facebook and Twitter. The profiles were divided into two groups: academic impact, with individuals who identify themselves as researchers in their profiles; and social impact, i.e. individuals who do not identify themselves as researchers. Maricato observed compelling evidence that the impact measured by altmetrics still concentrates on the relationship among scientists. “Still, it is interesting to observe that nonacademic profiles accounted for 36% of all the work that was done to disseminate articles,” he says.

Another result of the study shows that articles with the highest altmetric score were from the fields of health sciences (57%), followed by applied social sciences (14%), biological sciences (13%), human sciences (11%) and agricultural sciences (5%). According to Maricato, alternative metrics appear to help in evaluating scientific production by researchers who work in fields that do not have a strong tradition of publishing articles in international periodicals, such as applied social and human sciences. “In these areas, researchers publish more in books or chapters of books and tend to concentrate on local or national issues,” Maricato explains.

Point system

The system Altmetric developed is now one of the most widely used by researchers who are trying to create knowledge based on alternative indicators. The firm developed a point system, the Altmetric Attention Score, which tracks the amount of attention a work receives on different platforms. This is measured by the number of mentions and shares online, and it is weighted using the weight assigned to the profile that disseminates the article on social networks. For example, a mention on the page of a journal with a large circulation receives more points than a tweet.
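The weighting idea described above can be sketched in a few lines of Python. This is only an illustration of a weighted count of mentions, not Altmetric's actual algorithm: the source weights, the `profile_weight` field and the names `Mention` and `attention_score` are all hypothetical, chosen so that a mention in a news outlet counts for more than a tweet.

```python
from dataclasses import dataclass

# Hypothetical base weights per type of source, for illustration only.
# They are NOT the values used by Altmetric.
SOURCE_WEIGHTS = {
    "news": 8.0,
    "blog": 5.0,
    "tweet": 1.0,
    "facebook": 0.25,
}


@dataclass(frozen=True)
class Mention:
    """One online mention of an article."""
    source: str                  # a key of SOURCE_WEIGHTS
    profile_weight: float = 1.0  # assumed multiplier for the sharing profile


def attention_score(mentions):
    """Sum the source weight of each mention, scaled by its profile weight."""
    return sum(SOURCE_WEIGHTS[m.source] * m.profile_weight for m in mentions)


if __name__ == "__main__":
    one_news_story = [Mention("news")]
    two_tweets = [Mention("tweet"), Mention("tweet", profile_weight=0.5)]
    print(attention_score(one_news_story))  # 8.0
    print(attention_score(two_tweets))      # 1.5
```

Under these assumed weights, a single news story outscores two tweets, which mirrors the example in the text; a real scoring system would need published, transparent weights.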

According to Adie, of Altmetric, a high score does not necessarily mean that an article’s impact was positive, nor is it evidence of quality. “There are cases of papers that received many comments on social networks because of errors or fraud detected after publication,” he notes. In other cases, an article is broadly disseminated online because the subject being addressed is controversial. In 2014, for example, Philippe Grandjean of Harvard University and Philip Landrigan, a researcher at the Mount Sinai Medical Center in New York, published a controversial paper in The Lancet that obtained a high score according to the Altmetric methodology. In the article, the authors suggest that humankind is facing a silent pandemic caused by neurotoxins found in everyday products such as cosmetics, which can affect brain development and contribute to increasing the prevalence of disorders such as autism and dyslexia. The media reaction to the paper was one of alarm, and researchers and scientific societies disputed the results.

Alternative metrics are still in the throes of development, but they are already showing how to better measure the impact of science on society. “Altmetrics opens up opportunities to study new perspectives for accessing and disseminating scientific publications on online social platforms, and they reach larger audiences,” concludes researcher Rodrigo Costas.
