Complaints about indiscriminate use of the journal impact factor (JIF) to measure the quality of scientific publications have encouraged the Institute for Scientific Information, which is responsible for calculating the indicator, to examine its processes. At the end of June, the institute, which is a branch of Clarivate Analytics, released its annual edition of the Journal Citation Reports, which presents the JIF of 11,655 scientific journals worldwide. This year, instead of only publishing the traditional ranking based on the average number of citations received by each journal over a two-year period, the database also includes other information that gives context to how the index was determined. It is now possible to access supplementary data, such as a distribution curve showing all the articles published in each periodical and how often each was cited.

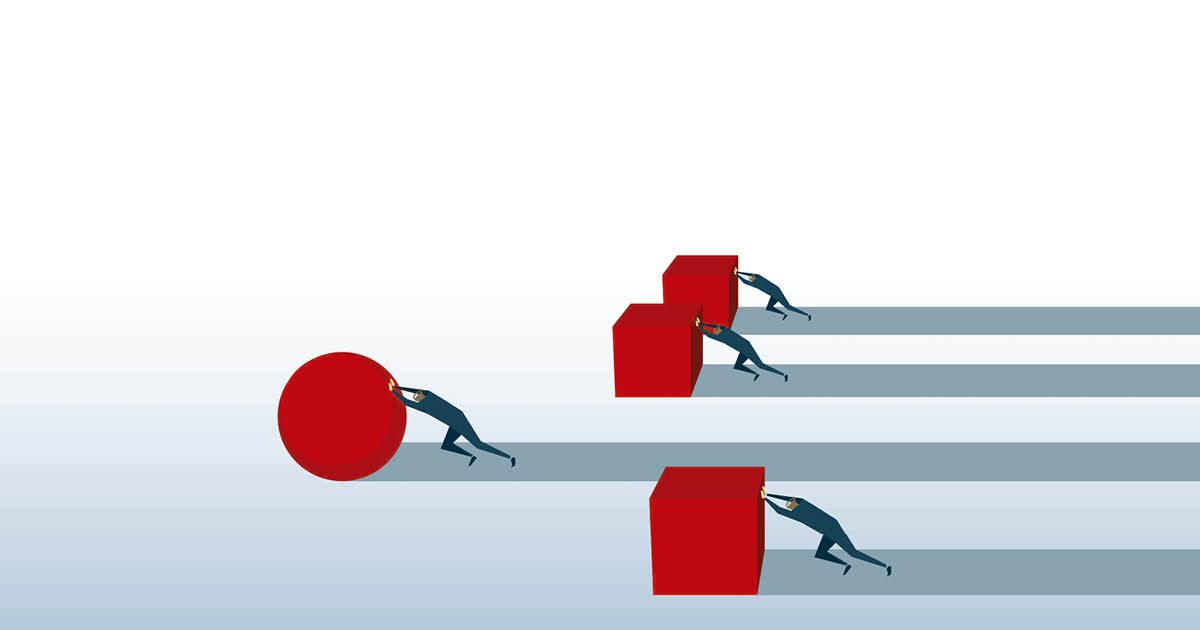

The new approach is a response to criticism that the index, often touted by journals and authors as a measure of prestige, is flawed due to the widely varying citation rates from article to article within a single journal. “The vast majority of scientific journals publish articles of a consistent standard. But those that publish papers of varying impact cause a problem,” says chemist Rogério Meneghini, scientific coordinator of the SciELO online library. The additional information provided this year helps to show if the performance of a publication is tied to a large set of articles—as is the case with Science, Nature, PLOS, and Scientific Reports—or if it is the result of a small number of rigorously selected papers. “Citation distribution graphs were created to offer a way to see into the journal impact factor. Don’t just look at the number, look through the number,” said Marie McVeigh, product director at Clarivate Analytics, on her Twitter profile. The aim of this new approach, she says, is to discourage use of the JIF alone and to show the diversity of data behind the index.

It is a common mistake to believe that the impact factor of a journal is representative of individual articles or authors

Other detailed information was also made available, including the median number of citations, which unlike the mean, is not influenced by extreme outliers. Another new element is the distinction between citations made in research articles, which reflect impact among peers, and those in review articles, which summarize previously published studies on a topic.
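The difference between the two measures can be illustrated with a minimal Python sketch. The citation counts below are invented for illustration and are not taken from the Journal Citation Reports; the variable names are likewise hypothetical.

```python
from statistics import mean, median

# Hypothetical citation counts for ten articles in one journal.
# Nine articles are cited a handful of times; one is cited 82 times.
citations_per_article = [0, 0, 1, 1, 2, 2, 3, 4, 5, 82]

mean_citations = mean(citations_per_article)      # pulled upward by the outlier
median_citations = median(citations_per_article)  # reflects the typical article

print(f"mean:   {mean_citations}")
print(f"median: {median_citations}")
```

In this invented example the mean is 10 citations per article while the median is 2, because a single highly cited paper dominates the average.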

In an article published on the Times Higher Education website, Jonathan Adams, director of the Institute for Scientific Information, says the new system is part of an effort to promote “responsible metrics,” suggesting that those responsible for other indicators should also strive to reveal the context in which they are produced. He cited the h-index as an example: it too has been criticized for being used indiscriminately, without regard for the field of knowledge or the length of a researcher’s career (see Pesquisa FAPESP issue no. 207). “We have made a firm move to increase every research assessor’s ability to make the responsible choices for their specific requirements. We and other data organizations will need to work to support the responsible use of metrics that world-class research deserves,” Adams said.

First published 44 years ago, the JIF has established itself as the most important indicator of journal influence, guiding the strategies adopted by publishers and enticing authors desperate to increase the visibility of their work. At the same time, it has caused misunderstandings. The most common is to take the indicator, which reflects the average performance of a journal, as representative of the quality or originality of each of its articles, when in fact the most it can say about any single article is how rigorously the periodical evaluates submissions. Another misleading perception is that publishing a paper in a high-impact journal guarantees a high number of citations. “People put a lot of stock in where an article was published, for which the journal impact factor is an important indicator, and very little in how much each paper contributes to the advancement of scientific knowledge,” says molecular biologist Adeilton Brandão, coeditor of Memórias do Instituto Oswaldo Cruz, a scientific periodical founded in 1907 that was ranked 2nd among Brazilian journals by the most recent Journal Citation Reports, with a JIF of 2.833. This means that on average, the roughly 240 articles it published in the last two years were cited in other journals just over 2.8 times each in 2017 (see table). “Most of us don’t monitor what happens to our articles after they are published. It is like the researcher’s work culminates in the moment when the results are published in a respectable journal, when actually this is only the beginning of the journey.” Brandão notes that Brazilian journals often have difficulty attracting articles with high impact potential. “The Brazilian research structure is heavily weighted toward graduate studies, where programs are strongly encouraged by advisory bodies to publish their results in foreign journals. It is difficult for our own periodicals to overcome this disadvantage,” he says.
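The arithmetic behind a figure such as 2.833 can be sketched in a few lines of Python. This is only an illustration of the standard two-year calculation (citations received in one year to items published in the two preceding years, divided by the number of those items). The article count of 240 is the rounded figure given in the text; the citation total of 680 is an assumption, back-calculated to match the reported JIF, and the function name is hypothetical.

```python
def journal_impact_factor(citations_in_year: int, items_prev_two_years: int) -> float:
    """Average citations received in one year per item published in the two prior years."""
    if items_prev_two_years <= 0:
        raise ValueError("the journal must have published at least one citable item")
    return citations_in_year / items_prev_two_years


# "Roughly 240" articles published in 2015-2016, per the text.
articles_published = 240
# ASSUMPTION: total 2017 citations to those articles, chosen to reproduce 2.833.
citations_received = 680

jif = journal_impact_factor(citations_received, articles_published)
print(f"JIF: {jif:.3f}")
```

Because the result is a simple average, it says nothing about how those citations are spread across the individual articles.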

The quest to achieve a high JIF at any price can lead to ethical conflicts. It is not uncommon for publishers to attempt to manipulate the index. Two common examples are self-citation, when a journal encourages articles to cite other papers that it has published, and cross-citation, a mutual agreement between the editors of two journals to cite each other’s articles. These are risky strategies. When it released the latest Journal Citation Reports, Clarivate Analytics also announced the suspension of 20 journals from its lists due to anomalous citation patterns. For two years, these publications will have no JIF. Brazil, which had six journals suspended from the JCR in 2013 (see Pesquisa FAPESP issue no. 213), had no suspensions this year.

For Brazilian publishers, the changes to the JIF report are welcome. “Journal impact factor is not an absolute indicator of the quality of a publication. There are journals that publish important theoretical papers, but they do not have a high JIF because the topics are of interest to few people,” says Marc André Meyers, editor in chief of the Journal of Materials Research and Technology (JMRT), which received the highest JIF in Brazil in 2018, achieving a score of 3.398. Meyers, a professor at the University of California, San Diego, believes that sharing contextual information tends to have little impact on the dynamics of scientific publications. “The JIF is a well-established indicator that attracts good authors, and this is still a central concern for the editors of any good journal. Accepting an article is always an investment with an element of risk. Sometimes the manuscript looks promising, but ends up receiving few citations.” The JMRT, created in 2011 by the Brazilian Association of Metallurgy, Materials, and Mining (ABM), has adopted an aggressive strategy to raise its impact factor, publishing a large number of foreign articles and being highly selective about the papers it accepts (see Pesquisa FAPESP issue no. 263).

For Jean Paul Metzger, a professor at the Institute of Biosciences at the University of São Paulo (USP) and editor in chief of Perspectives in Ecology and Conservation, the JIF of 2.766 obtained by the publication is the result of recent work to increase its visibility—the journal was not even in the top 10 of the 2017 Journal Citation Reports, but appeared in 3rd place in the 2018 list. The journal, which until recently was called Natureza e Conservação (Nature and conservation), took a gamble on a niche field of scientific articles that evaluate public ecology policies. “Some of our most cited papers related to the consequences of the Mariana tragedy, as well as a study on changes made to the native vegetation protection law, known as the Brazilian Forest Code, which was written in accessible language and was even used to support discussions in the Brazilian supreme court. These topics attract the attention of a wider audience, including researchers and decision makers, and appeal to good authors interested in discussing the effects of public policy,” he says. Metzger acknowledges that misuse of the journal impact factor is a problem, but he believes that despite the limitations, the index is still useful. “The number of articles published in all fields of knowledge has been growing exponentially, and we need some way of screening and ranking the best articles. The JIF allows us to more carefully select journals that generally publish impactful articles,” he says.
