Léo ramos

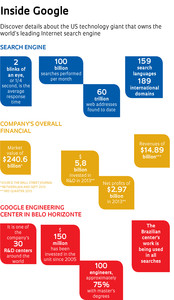

From left, Berthier Ribeiro-Neto, Bruno Pôssas, Paulo Golgher, Bruno Fonseca and Hugo Santana (standing)Léo ramosTwo blinks of an eye—corresponding to a quarter of a second—is the average time that Google, the world’s leading Internet search engine, takes to provide answers to user queries. The search engine receives 100 billion queries per month, an average of 3.3 billion searches per day, 137.5 million per hour and an incredible 2.3 million per minute. More than 20 billion web addresses are analyzed every 24 hours by Google, which is presented with 500 million new queries daily, that is, queries it has never before received. Here in Brazil, the site has a 91% share of the Internet search market. What few know is that, to process so much information, the technology company based in Mountain View, one of the largest cities in Silicon Valley, California, relies on the talent of a team of Brazilian researchers at its Latin American Engineering Center in Belo Horizonte, the capital city of the state of Minas Gerais.

Located in a building in the central region of the state capital, the unit was established in 2005 and is today one of the most important among the company’s 30 research and development centers scattered across the globe in cities like New York, Zurich, Tokyo, and Bangalore (India). “One hundred percent of searches made globally, every day, are better in terms of relevance to the query because of the research developed by the team in Belo Horizonte,” says computer scientist Berthier Ribeiro-Neto, 53, Google engineering director and one of the leaders of the Brazilian team. “We are responsible for the second most important search-improving change in the history of Google. Moreover, five of the 30 major experimental search engine user projects came out of our office,” says the researcher. This second most important innovation, which cannot be described in detail because it is secret, is related to two fundamental problems with search engines: understanding precisely what the user is saying in the query and understanding what each of the documents on the web means. It is the marriage of these two “understandings” that, in the end, makes the information presented by the search engine the closest to what the user is looking for.

| Google | Latin American Engineering Center, Belo Horizonte, MG |
| --- | --- |
| No. of employees | 500 |
| Principal products | Internet search engine, Gmail and the social network G+ |

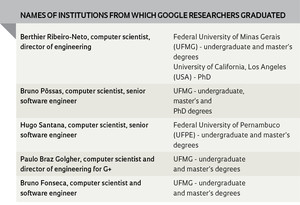

The main area of expertise of the researchers in Belo Horizonte is core ranking, which is the order in which the links are displayed on the results page. “Our group’s focus is search quality. We work to ensure that the response to the user’s query is the best possible, and that the first response in the ranking presented by Google is indeed what the user is looking for,” says computer scientist Hugo Pimentel de Santana, 32. “One of our biggest efforts is trying to understand that certain queries, although written differently, represent the same user intent,” he says. With an undergraduate degree from the Federal University of Pernambuco (UFPE) and a Master’s from the same institution in the field of artificial intelligence, Santana has been a software engineer at Google for six years and leads the 16-member ranking team. He travels to Mountain View two to three times a year for training and to attend meetings with the company’s global team. Google’s headquarters is the site of the principal search engine center.

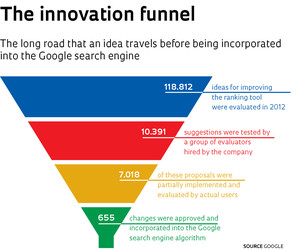

The challenge of improving core ranking is great, and every suggestion goes through a long and meticulous evaluation process. In 2012 alone, 118,812 ideas to make the ranking tool more effective were presented by the company’s engineers worldwide. Of this total, fewer than 10% (10,391) were analyzed by the raters, a user group hired by Google. About 30% of these were discarded and 7,018 continued on to the next phase, partial deployment, where they were reviewed by groups of actual users. At the end of the process, only 665 changes were approved and incorporated into the Google search engine.
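To make the proportions in this funnel easier to see, the short Python sketch below recomputes them from the four figures quoted above. The variable names and stage labels are illustrative only, and the snippet is plain arithmetic on the published numbers, not a description of Google’s internal evaluation process.

```python
# Figures quoted in the article for 2012.
proposed = 118_812     # ideas presented by engineers worldwide
rated = 10_391         # ideas analyzed by the raters
live_tested = 7_018    # ideas that reached partial deployment
launched = 665         # changes incorporated into the search engine


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Stage labels are hypothetical names chosen for this illustration.
funnel = {
    "rated / proposed": share(rated, proposed),
    "discarded at rating": share(rated - live_tested, rated),
    "live-tested / rated": share(live_tested, rated),
    "launched / proposed": share(launched, proposed),
}

for stage, pct in funnel.items():
    print(f"{stage}: {pct}%")
```

Read this way, roughly one idea in eleven reached the raters, about a third of those were dropped, and well under 1% of all proposals ended up in the search engine.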

Relaxation and work in the Minas Gerais and São Paulo offices. Photo: Léo Ramos

One indication of the importance of the Belo Horizonte Engineering Center is that some of its researchers have full access to Google’s search algorithm, the huge code that makes the engine run. The algorithm is highly confidential and has the same importance for Google as the formula for Coca-Cola does for that beverage company: it is the foundation of its success. “Few groups outside headquarters in Mountain View are working on improving the search algorithm,” says Bruno Pôssas. At 36, with undergraduate, master’s and PhD degrees in computer science from the Federal University of Minas Gerais (UFMG), Pôssas is responsible for all improvements to the Google algorithm proposed by the team in Belo Horizonte.

According to Pôssas, the knowledge of the Brazilian researchers in a theoretical area of fundamental importance for building search engines, known as “information retrieval,” explains their good reputation with the head office in Silicon Valley. “Google started in Belo Horizonte with a very good information retrieval group recognized by the international scientific community for the quality of its published articles,” says Pôssas. He is referring to the team that, in 2000, founded the company Akwan Information Technologies, which was acquired by Google five years later and became its Brazilian research center.

Search engine

Akwan owned a search engine that was focused on the Brazilian web, called TodoBR, which had been developed by a group of professors from the UFMG Computer Science Department. “TodoBR was much better than the Google search engine for Brazil at the time. Before long, our search engine caught on and we decided to open a company,” recalls Ribeiro-Neto, one of the six founders of Akwan. The others were professors Nívio Ziviani, Alberto Laender and Ivan Moura Campos, all from UFMG, and market investors Guilherme Emrich and Marcus Regueira. When the company grew, Ribeiro-Neto decided to take a leave of absence from the university to manage the day-to-day activities of the business.

“Our initial difficulty was a lack of low-cost financing. We approached the BNDES (Brazilian Development Bank) and were turned down. We survived by selling solutions to the corporate market in São Paulo until, at the end of 2004, a colleague put us in touch with a vice president of engineering at Google. We signed the deal within months,” says Ribeiro-Neto. Google’s goal when buying Akwan, according to Ribeiro-Neto, was to build an R&D center in Brazil. When the American company acquired the Brazilian company, it canceled all of its contracts, but kept its personnel. “Google realized that that group of academics had developed a cutting-edge tool. We had our own ideas about how to deal with search engine challenges. We were Google’s first global acquisition outside the United States,” says Ribeiro-Neto, noting that “the focus of the group’s work has always been the development of global innovations.” The researcher is the only one of the six Akwan founders who remained at Google.

More than $150 million has been invested in the Engineering Center since it began operations in 2005. Currently, about 100 engineers work there, and 75% have master’s degrees or PhDs in computer science. Most researchers are Brazilian, but there are also professionals from other countries, including the United States, India, Chile, Colombia and Venezuela. “We are looking for proactive, creative engineers with good technical training, who take the initiative,” says Ribeiro-Neto, who is co-author of the book Modern Information Retrieval. Originally published in 1999, the work is important in computer science and was consulted by the founders of Google, Larry Page and Sergey Brin, during graduate school at Stanford University, when they developed the project that gave rise to the search firm.

The company has more than 500 employees in Brazil, divided between the R&D center in Belo Horizonte and the main office in São Paulo. Employees enjoy a relaxed atmosphere with spaces dedicated to creative leisure, equipped with hammocks, lounges, pool tables, videogames, a comic book library, and beanbags shaped like ctrl, esc, alt and del keys for relaxing.

The Belo Horizonte team is also dedicated to researching and developing products for the social network Google+, launched in 2011 to compete with Facebook, and is responsible for managing Orkut, which was once Brazil’s most popular social website. When Google bought Akwan, in 2005, Brazil was Orkut’s leading market. Three years later, the Brazilian subsidiary became responsible for the platform, which grew to have 30 million Brazilian users. “The group I lead has about 40 people and is one of the three most important, along with the teams from Mountain View and Zurich,” says the director of engineering for G+, Paulo Golgher, 36. The daily tasks of engineers include creating new features for Google+ and developing programs that make the social network more secure and keep it beyond the reach of hackers. “We designed automated systems so that the platform itself detects threats and abuse, such as pornographic content, viruses and spam,” says software engineer Bruno Maciel Fonseca, 32.

Academic research

Besides investing in innovations intended for its own products, Google also funds academic projects in Brazilian universities. The Google Brazil Focused Research Grants program, launched in 2013, distributes about R$1 million to five doctoral research projects aimed at finding out how people behave in the virtual environment of the Internet. The company has a tradition of promoting research in areas of interest to it in American and European higher education institutions, but this is the first time that it is supporting Brazilian projects. The funding does not require the assignment of the intellectual property rights to the research. “During the selection process, we sent invitations to 25 researchers and received 20 proposals. We chose the five that were of a quality commensurate with the Google brand,” explains Ribeiro-Neto.

One of the funded projects is being carried out at the Institute of Postgraduate Studies and Research in Engineering (COPPE), of the Federal University of Rio de Janeiro (UFRJ), with the ultimate goal of improving the quality of distance education in Brazil. The project intends to analyze the reactions of students in these courses during video classes and identify their attention levels. The research is led by Prof. Edmundo de Souza e Silva, coordinator of the Coppe research group. Data are collected using a series of sensors and devices connected to the students that provide information about their mental state during classes provided in video format. While a webcam captures the students’ facial expressions and pupil size, a bracelet containing a biosensor measures the conductivity of the skin, a headband captures brain waves, and a sensor measures the movement of the mouse. “The level of skin conductance and pupil dilation are indicators that the person is more or less attentive,” says Silva.

According to Silva, in a traditional classroom, the teacher can observe students’ reactions and see how attentive they are. But this is impossible in distance education courses. “The system we are developing is intended to help close that gap,” says Silva. During a video lesson, if the system concludes, based on the data sent by the devices (webcam, bracelet and sensors), that the student is inattentive, it automatically alters the course of the lesson by, for example, asking the student to do some task or by changing the content being displayed. Conducted jointly with Prof. Rosa Leão, from Coppe, PhD candidate Gaspare Bruno and master’s degree candidate Thothadri Rajesh, the study is initially focusing on students in the computing systems course offered by Cederj, a consortium of seven public institutions of higher learning in Rio de Janeiro, among them UFRJ and Fluminense Federal University (UFF).

Experiment carried out at UFRJ analyzes students’ level of attention in distance learning. Photo: Léo Ramos

Another Brazilian project supported by Google seeks to understand what makes content posted on the video-sharing site YouTube become popular. “We want to understand the various factors that may affect the audience for a video and thus predict its popularity curve over time,” says Prof. Jussara Almeida of the Department of Computer Science at UFMG. The research is part of the doctoral work of computer scientist Flavio Figueiredo. The methodology created at UFMG is capable of improving average popularity forecasts for videos by more than 30%, compared to the most renowned technique used for this purpose, developed by Bernardo Huberman, a researcher at HP Labs, in Palo Alto, California. During the study, hundreds of thousands of YouTube videos were monitored and various types of information were collected from the site, including video category, the curve of views over time since uploading, and the origin of the links used to reach each video.

“By analyzing the viewing history of these videos, we found that a small number of popularity curve patterns repeat themselves. We realized that if we could predict this curve, we could improve our prediction and say how the popularity of certain content will evolve over time,” says Almeida. One conclusion of the study was that the quality of the video content is not always a determinant of its popularity. Often, the video “goes viral” on the web after a link to it is posted on an external site such as a blog or even Facebook. Understanding these dynamics can provide important information for advertisers of goods and services on the internet, in addition to content producers.

Google is also funding another project at the same UFMG Computer Science Department. Prof. Marcos André Gonçalves and PhD candidate Daniel Hasan used algorithms and computational techniques to automatically assess the quality of articles and content posted on web 2.0 sites, meaning sites whose pages are created through the collaboration of Internet users. The virtual encyclopedia Wikipedia, containing more than 14 million articles, was the initial focus of the study. “We started with Wikipedia and extended the study to question and answer forums,” explains Gonçalves. To determine the degree of reliability of the pages, the researchers developed a set of 68 quality criteria such as text readability, the structure and organization of the articles, and the history of revisions to the posted content. “We created an application, which is not yet commercial, that calculates a score for each of the criteria,” says the UFMG professor. Among the various existing methodologies that purport to do something similar, the one designed by Gonçalves and his student provides the best results when tested. “Our methodology could serve as a compass, showing the user which web content has greater quality and credibility. We imagine that, in the future, the application could be used to sort the pages returned in a search according to some criterion of reliability,” says Gonçalves.
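As a rough illustration of what “a score for each of the criteria” can look like in practice, the Python sketch below scores a page on three toy criteria loosely inspired by the ones named above (readability, structure and revision history) and averages them. Everything here is an assumption made for illustration: the function names, the thresholds and the simple average are hypothetical, and the article does not say how the UFMG application defines or combines its 68 criteria.

```python
from statistics import mean


def readability_score(text: str) -> float:
    """Toy proxy: an average sentence of 15 words or fewer scores 1.0."""
    normalized = text.replace("!", ".").replace("?", ".")
    sentences = [s for s in normalized.split(".") if s.strip()]
    if not sentences:
        return 0.0
    avg_words = mean(len(s.split()) for s in sentences)
    # Linear decay from 1.0 at 15 words to 0.0 at 45 words (arbitrary cutoffs).
    return max(0.0, min(1.0, 1.0 - (avg_words - 15) / 30))


def structure_score(section_count: int) -> float:
    """Toy proxy: five or more sections counts as fully structured."""
    return min(section_count, 5) / 5


def revision_score(revision_count: int) -> float:
    """Toy proxy: fifty or more revisions counts as well reviewed."""
    return min(revision_count, 50) / 50


def quality_score(text: str, section_count: int, revision_count: int) -> float:
    """Combine the per-criterion scores with a plain average (an assumption)."""
    return mean([
        readability_score(text),
        structure_score(section_count),
        revision_score(revision_count),
    ])


score = quality_score("Short sentence. Another one.", section_count=5, revision_count=25)
print(round(score, 3))
```

A real system would need far more criteria and some way of learning how much each one should count, but the shape is the same: many small measurements reduced to a single number that a search engine could sort by.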
