Thanks to computer systems that obtain information from digital images and simulate human cognition, machines can already see, process, and interpret the world around them. Computer vision is an advanced engineering technology primarily used to monitor and analyze various processes and activities. Scientists from several countries, including Brazil, are investing in and developing the technology in order to expand its use. The aim is to incorporate it into systems that help diagnose diseases, devices that help people with speech problems communicate, and driverless cars, among other applications.

“Computer vision is closely associated with imaging and decision-making technologies, based on analytical or artificial intelligence algorithms,” says mechanical engineer Paulo Gardel Kurka, from the School of Mechanical Engineering at the University of Campinas (FEM-UNICAMP), one of Brazil’s biggest centers for research in this area. Research on digital image processing advanced rapidly in the 1970s, thanks to increases in computer processing capacity and new electronic sensors capable of capturing and digitizing images. In the following decades, research into semiconductor materials and the miniaturization of electronics led to more sophisticated systems that could capture, process, and analyze information from these images more efficiently.

Computer vision technology is based on three image-processing stages. The first involves using a device, such as a camera, to capture the image. The image is recorded on a two-dimensional array of light sensors, each of which stores a numerical value, encoded in binary, that represents the intensity of the light it receives. The elements of this array correspond to the pixels used to display the image on a screen. “This data is then processed to improve the quality of the image and highlight or eliminate certain characteristics that provide no useful information for the intended purpose,” Kurka explains.

This second step uses techniques that identify and select the regions and elements of the array that contain the relevant data. “The processing stage defines the regions of interest used in subsequent analyses, which will adapt the characteristics of each image to the objectives of the application.” The last step is to recognize, analyze, and classify the data of interest. How this is achieved depends on the application.
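The three stages Kurka describes can be illustrated with a toy Python sketch. It is not how any of the systems in this article work; it only assumes that the "captured" image is a small hard-coded grid of 8-bit intensities, that preprocessing is a 3×3 mean filter, that the region of interest is every pixel above a fixed threshold, and that "classification" is a simple size rule. All function names and threshold values are hypothetical.

```python
# Toy three-stage computer vision pipeline (hypothetical illustration).
Image = list[list[int]]


def mean_filter(img: Image) -> Image:
    """Stage 2a: reduce noise by replacing each pixel with the average of its 3x3 neighbourhood."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Edge pixels simply use the neighbours that exist.
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) // len(vals)
    return out


def region_of_interest(img: Image, threshold: int) -> list[tuple[int, int]]:
    """Stage 2b: keep the (row, col) coordinates of pixels brighter than the threshold."""
    return [(y, x) for y, row in enumerate(img) for x, v in enumerate(row) if v > threshold]


def classify(region: list[tuple[int, int]], min_size: int) -> str:
    """Stage 3: label the scene from the size of the selected region (hypothetical rule)."""
    return "object present" if len(region) >= min_size else "background only"


# Stage 1: a 5x5 "captured" frame with a bright object in the middle.
frame = [
    [10, 12, 11, 10, 9],
    [11, 200, 210, 205, 10],
    [12, 198, 220, 202, 11],
    [10, 205, 207, 199, 12],
    [9, 11, 10, 12, 10],
]

smoothed = mean_filter(frame)
roi = region_of_interest(smoothed, threshold=100)
print(classify(roi, min_size=4))
```

Real systems replace each stage with far more capable techniques, but the division of labor (acquire, enhance and select, then interpret) is the same.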

The global image-processing market was worth US$11.9 billion in 2018 and could reach US$17.3 billion by 2023, according to market research firm MarketsandMarkets. Seven of the top 20 companies investing in this technology are based in the USA. One of them is Google, which is developing the technology for autonomous cars. The plan is to equip the vehicles with cameras and sensors that capture, process, and analyze images in real time, distinguishing people from objects and helping the cars navigate their environment.
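As a rough check on scale, the two market figures imply a compound annual growth rate of about 7.8% over the five years from 2018 to 2023. This is a back-of-the-envelope calculation that assumes steady compound growth; it is not a figure from the report:

$$
\text{CAGR} = \left(\frac{17.3}{11.9}\right)^{1/5} - 1 \approx 1.454^{0.2} - 1 \approx 0.078
$$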

Great strides have also been made in the academic sector. Caltech and MIT, two of the world’s leading technology research centers, are working on image-processing systems with a range of applications. Some of the advances made at Caltech, for example, were used to create georeferencing devices for robots sent to Mars. At MIT, the technology is being used to identify objects in the dark, to give robots human-like skills such as sensitivity and dexterity, and to develop autonomous cars.

Acta Visio

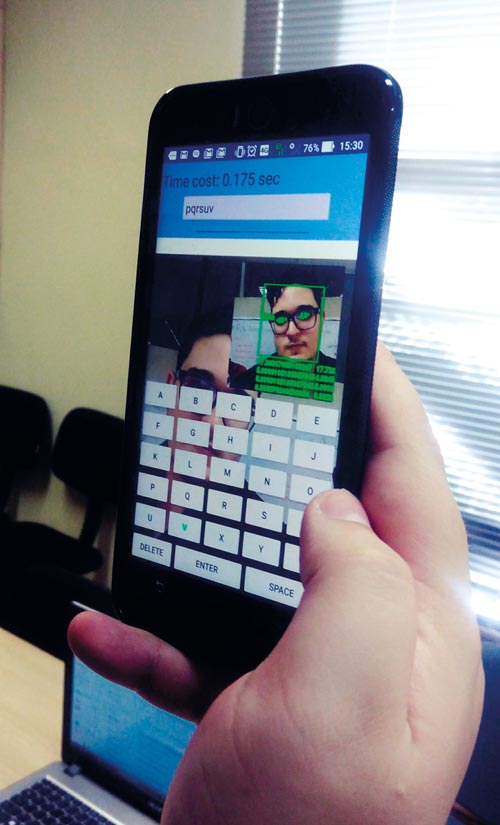

EyeTalk: smartphone app uses camera to detect eye movements and converts text to speechActa VisioIn Brazil, one of the most frequent applications of computer vision is in the monitoring of industrial processes, one of the pillars of industry 4.0. One such example is Autaza, a startup based in São José dos Campos, São Paulo State, which has created an industrial inspection system that uses cameras and artificial intelligence to photograph parts on a production line and identify possible defects.
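Autaza's own method is not described here. As a hypothetical illustration of one common approach to visual inspection, the sketch below compares a photographed part with a defect-free reference image and flags pixels whose intensity differs by more than a tolerance. The images, the tolerance value, and the `find_defects` function are assumptions made for the example.

```python
Image = list[list[int]]


def find_defects(reference: Image, sample: Image, tolerance: int = 30) -> list[tuple[int, int]]:
    """Return (row, col) of every pixel where the sample deviates from the defect-free reference."""
    return [
        (y, x)
        for y, (ref_row, row) in enumerate(zip(reference, sample))
        for x, (r, s) in enumerate(zip(ref_row, row))
        if abs(r - s) > tolerance
    ]


reference = [[120] * 4 for _ in range(3)]  # uniformly painted panel
sample = [row[:] for row in reference]
sample[1][2] = 40    # dark scratch: well outside the tolerance
sample[0][0] = 130   # small lighting variation: within the tolerance

defects = find_defects(reference, sample)
print("reject" if defects else "accept", defects)
```

This toy version assumes the two images are already aligned and equally lit; in practice those corrections, and a trained model rather than a fixed tolerance, do much of the work.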

Similar systems are also used in the Brazilian logging industry. Researchers from the São Paulo State University (UNESP) Itapeva campus and the Institute of Mathematical and Computer Sciences at the University of São Paulo (ICMC-USP) in São Carlos have created a technology capable of indicating the quality of the wood and the species of tree from which it came. The system, used by Sguario, a logging company based in Itapeva, São Paulo State, verifies that the wood is of legal origin and sorts it by species, which determines its value.
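The article does not detail how the UNESP and ICMC-USP system works. A common family of techniques for this kind of task describes the texture of a wood image with numerical features and assigns the species whose known samples are closest. The toy sketch below uses two made-up features (mean gray level and average horizontal contrast) and a nearest-centroid rule; the species labels, features, and centroid values are all hypothetical.

```python
import math

Image = list[list[int]]


def texture_features(img: Image) -> tuple[float, float]:
    """Mean gray level and mean absolute difference between horizontally adjacent pixels."""
    pixels = [v for row in img for v in row]
    mean = sum(pixels) / len(pixels)
    diffs = [abs(row[x + 1] - row[x]) for row in img for x in range(len(row) - 1)]
    contrast = sum(diffs) / len(diffs)
    return mean, contrast


def nearest_centroid(features: tuple[float, float],
                     centroids: dict[str, tuple[float, float]]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical centroids, as if computed from labeled samples of two species.
centroids = {
    "species A (light, fine grain)": (180.0, 5.0),
    "species B (dark, coarse grain)": (90.0, 40.0),
}

# A small, dark, strongly striped sample image.
sample = [
    [95, 60, 100, 55],
    [90, 130, 85, 125],
]
print(nearest_centroid(texture_features(sample), centroids))
```

Practical wood-recognition systems use richer texture descriptors and more elaborate classifiers, but the structure (describe the texture, then compare with known examples) is the same.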

Enhanced communication

Computer vision is also being used to help people with illnesses and physical disabilities. São Paulo–based startup Hoobox Robotics, founded in 2016 by researchers from UNICAMP, has created a facial recognition system that captures and translates facial expressions into commands to control the movement of a wheelchair. The technology, called Wheelie 7, can recognize more than 10 expressions, such as a raised eyebrow or a blink of the eyes. Using a camera to monitor the user’s face, the system captures the expressions and uses an algorithm to interpret them. The program then translates them into commands, such as moving forward or turning to the left.
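The article does not describe Wheelie's internals. The last step in the paragraph, translating a recognized expression into a command, can be pictured as a lookup table. In this hypothetical sketch, the expression labels, the command names, and the choice to stop on any unrecognized expression are assumptions; a real system would also need the recognition model that produces the labels.

```python
from enum import Enum


class Command(Enum):
    FORWARD = "move forward"
    LEFT = "turn left"
    RIGHT = "turn right"
    STOP = "stop"


# Hypothetical mapping, e.g. configured for each user during setup.
EXPRESSION_TO_COMMAND = {
    "raised_eyebrows": Command.FORWARD,
    "half_smile_left": Command.LEFT,
    "half_smile_right": Command.RIGHT,
    "blink": Command.STOP,
}


def to_command(expression: str) -> Command:
    """Translate a recognized expression into a command; anything unknown stops the chair."""
    return EXPRESSION_TO_COMMAND.get(expression, Command.STOP)


for expr in ["raised_eyebrows", "half_smile_left", "yawn"]:
    print(expr, "->", to_command(expr).value)
```

Defaulting to `STOP` is a fail-safe design choice for the example: a misread expression halts the chair instead of moving it.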

The solution was developed with funding from the FAPESP Technological Innovation in Small Businesses (PIPE) program. For now, the wheelchair is only available in the USA, for a monthly subscription of US$300. Hoobox, in partnership with Albert Einstein Hospital in São Paulo, is testing the technology’s ability to detect behaviors such as tremors or spasms in intensive care unit (ICU) patients.

Image-processing systems are used by loggers to indicate the quality of wood and the species of tree from which it came (Annals of Forest Science)

In a similar approach, mechanical engineer Marcus Lima, a researcher at FEM-UNICAMP, used computer vision to create a mobile application that uses the front camera to detect eye movements, enabling the user to communicate by converting text to speech. The app, called EyeTalk, is also the result of research funded by PIPE. Lima says he initially intended to create a system that could convert eye movements into commands to control drones. “While working on the project, I realized that I could adapt the technology to be used by people with speech impairments,” he recalls.

The solution works like a virtual keyboard whose keys light up in sequence. The setup is simple: a tablet or smartphone running the app is mounted on a stand in front of the user, with the front-facing camera pointed at their eyes. The user blinks to select letters as they light up, forming words and phrases, which the app then reads aloud in a synthesized voice. The technology is similar to the system used by British physicist Stephen Hawking (1942–2018), who had amyotrophic lateral sclerosis (ALS), used a wheelchair for most of his life, and lost the ability to speak in 1985. “The problem,” says Lima, “is that the available models cost up to £15,000, which is unaffordable for most people.”
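A keyboard whose keys light up one after another and are selected by a blink is usually called a scanning keyboard. The minimal simulation below assumes one key is highlighted per time step, that a blink detector reports True or False for each step, and that the scan restarts at the first key after every selection. The key layout and function names are hypothetical and are not taken from EyeTalk.

```python
KEYS = "ABCDEFGHIJKLMNOPQRSTUVWXYZ "  # hypothetical layout: letters plus a space key


def scan_keyboard(blinks: list[bool], keys: str = KEYS) -> str:
    """Simulate a scanning keyboard driven by a blink detector.

    Each entry in `blinks` is one highlight step. False means no blink, so the
    highlight moves to the next key; True means a blink was detected, so the
    highlighted key is typed and the scan restarts at the first key.
    """
    text = []
    position = 0
    for blinked in blinks:
        if blinked:
            text.append(keys[position])
            position = 0
        else:
            position = (position + 1) % len(keys)
    return "".join(text)


def blinks_for(word: str, keys: str = KEYS) -> list[bool]:
    """Demo helper: the blink pattern a user would produce to spell `word`."""
    pattern: list[bool] = []
    for ch in word:
        pattern += [False] * keys.index(ch) + [True]
    return pattern


phrase = scan_keyboard(blinks_for("HELLO"))
print(phrase)  # a real app would pass this text to a speech synthesizer
```

Restarting the scan after each selection keeps the model simple; real scanning keyboards often group keys into rows first to cut down the number of steps per letter.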

Today, Lima runs Acta Visio, a company he founded in 2017 to develop solutions based on computer vision. The researcher and his team are working on the prototype of an image-processing system that monitors hand hygiene among healthcare professionals. The aim is to reduce infections in hospitals and provide quantitative data that can help hospital managers evaluate sanitation procedures. The first tests will be conducted in April at the Cajuru University Hospital in Curitiba, in partnership with hospital management company 2iM.

Device created by Hoobox translates facial expressions into commands to control a wheelchair (Hoobox)

More accurate diagnoses

Image-processing systems are also being used in the health sector to identify biomarkers, helping to diagnose and treat certain types of cancer, such as breast cancer. In many cases, tumors are discovered when the disease is already at an advanced stage. Early detection usually results from clinical examinations and mammography, which uses low-energy X-rays to identify potentially cancerous lesions. A biopsy of the suspicious tissue is often needed for a more accurate diagnosis. On average, of every eight biopsies performed, only one is positive.

Medical physicist Paulo Mazzoncini de Azevedo Marques, a professor of biomedical informatics at the USP School of Medicine in Ribeirão Preto, has developed a technique capable of identifying patterns associated with this type of tumor, which could reduce the number of biopsies needed. “We used computer vision to create an algorithm that can detect and analyze microcalcifications—small crystals of calcium in the breast that appear as white specks on a mammogram—and indicates whether they are likely to be a benign or malignant [cancer] lesion,” he says. “The algorithm evaluates each pixel of the image in specific regions, and by extracting quantitative attributes, identifies variations that may be associated with a suspicious pattern.”
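The quote gives only an outline of the algorithm. The hypothetical sketch below illustrates the general idea of evaluating each pixel in a region and extracting quantitative attributes: it marks pixels much brighter than the region's average as candidate specks, groups touching pixels into spots, and reports how many spots there are and how large each one is. The brightness margin and the choice of attributes are assumptions; the real system's features and the step that rates a lesion as likely benign or malignant are not shown.

```python
Image = list[list[int]]


def find_specks(region: Image, margin: int = 60) -> list[int]:
    """Return the pixel count of each bright speck (4-connected group of bright pixels), sorted."""
    h, w = len(region), len(region[0])
    mean = sum(map(sum, region)) / (h * w)
    # Per-pixel test: is this pixel much brighter than the region's average?
    bright = {(y, x) for y in range(h) for x in range(w) if region[y][x] > mean + margin}
    sizes = []
    while bright:
        # Flood-fill one connected speck and count its pixels.
        stack = [bright.pop()]
        size = 0
        while stack:
            y, x = stack.pop()
            size += 1
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if (ny, nx) in bright:
                    bright.remove((ny, nx))
                    stack.append((ny, nx))
        sizes.append(size)
    return sorted(sizes)


# A small region of a (synthetic) mammogram with two bright specks.
region = [
    [50, 52, 51, 49, 50, 48],
    [51, 230, 225, 50, 52, 50],
    [49, 228, 50, 51, 240, 49],
    [50, 51, 50, 49, 50, 51],
]
specks = find_specks(region)
print("number of specks:", len(specks), "sizes:", specks)
```

Attributes such as the number, size, and clustering of specks are the kind of quantitative input a later classification step could use.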


The model can also be trained to recognize new patterns to support the diagnosis and treatment of lung tumors and autoimmune rheumatic diseases. By using artificial intelligence algorithms to analyze variations in the gray tones of X-ray, MRI, and CT images, the system can isolate suspicious areas and compare certain characteristics with those of lesions identified in images from confirmed cases.
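One simple way to compare a suspicious area with lesions from confirmed cases is to retrieve the most similar previously diagnosed examples. The hypothetical sketch below does this with a k-nearest-neighbor vote over feature vectors. The three features, the stored cases, and the choice of k = 3 are invented for illustration and are not data or methods from the USP Ribeirão Preto project.

```python
import math
from collections import Counter

# Hypothetical feature vectors (e.g. mean gray level, gray-level variance, lesion area),
# scaled to comparable ranges, for previously confirmed cases.
CONFIRMED_CASES = [
    ((0.80, 0.60, 0.30), "malignant"),
    ((0.75, 0.65, 0.35), "malignant"),
    ((0.85, 0.55, 0.40), "malignant"),
    ((0.40, 0.20, 0.10), "benign"),
    ((0.35, 0.25, 0.15), "benign"),
    ((0.45, 0.15, 0.12), "benign"),
]

Features = tuple[float, float, float]


def most_similar(features: Features, k: int = 3) -> list[tuple[Features, str]]:
    """Return the k confirmed cases closest to `features` (Euclidean distance)."""
    return sorted(CONFIRMED_CASES, key=lambda case: math.dist(features, case[0]))[:k]


def suggest_label(features: Features, k: int = 3) -> str:
    """Majority label among the k most similar confirmed cases."""
    votes = Counter(label for _, label in most_similar(features, k))
    return votes.most_common(1)[0][0]


suspicious = (0.78, 0.58, 0.33)
print(suggest_label(suspicious))
```

Returning the matched cases through `most_similar`, and not only a label, lets a physician inspect the examples the suggestion is based on.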

“Our aim is for the system to accumulate data and learn from experience, establishing patterns that can help physicians recognize the most relevant areas of an image, their characteristics, and whether they are associated with more aggressive tumors,” explains the researcher. The system is being fed public data from local clinical databases to train it and refine its ability to recognize patterns associated with these diseases.

Projects

1. Automatic quality inspection of automobile bodies (nº 17/25873-8); Grant Mechanism: Technological Innovation in Small Businesses (PIPE) program; Principal Investigator: Jorge Augusto de Bonfim Gripp (Autaza Tecnologia); Investment: R$1,452,695.60.

2. Wheelie: Innovative technology for automatic wheelchairs (nº 17/07367-8); Grant Mechanism: Technological Innovation in Small Businesses (PIPE) program; Principal Investigator: Paulo Gurgel Pinheiro (Hoobox Robotics); Investment: R$723,814.04.

3. Design, development, and assembly of a prototype eye tracking system embedded in first-person-view goggles to control a drone for quadriplegic individuals (nº 16/15351-1); Grant Mechanism: Technological Innovation in Small Businesses (PIPE) program; Principal Investigator: Marcus Vinicius Pontes Lima (Acta Visio); Investment: R$57,974.44.

4. Development and implementation of domain ontology in radiology and image diagnosis for clinical practice in a teaching hospital (nº 11/08943-6); Grant Mechanism: Regular Research Grant; Principal Investigator: Paulo Mazzoncini de Azevedo Marques (USP Ribeirão Preto); Investment: R$55,731.32.